By MARGARET CRAIG-BOURDIN

For all the enormous potential artificial intelligence offers, it also poses short- and long-term risks. But with the right approach and framework, it’s possible to mitigate them.

Now that artificial intelligence seems to be here to stay, it offers almost limitless potential to push us forward as a society. In fact, one PwC study says AI could contribute $15.7-trillion to the global economy by 2030 as a result of productivity gains and increased consumer demand. And according to Dr. Anand S. Rao, global AI lead for PwC, AI’s transformative potential continues to be top of mind for business leaders: “A full 85 per cent of CEOs believe AI will significantly change the way they do business in the next five years,” he says.

But for all the enormous potential AI offers, it also poses short- and long-term risks. As Irene M. Wiecek, professor of accounting, teaching stream, at the University of Toronto and lead on the skills and competencies workstream for Foresight: Reimagining the Profession, as well as a member of the CPA Competency Map Task Force, puts it: “While creating many opportunities, AI is also presenting challenges for individuals, companies and, more broadly, for society. And it’s important for CPAs to be aware of those challenges if they want to provide the best possible advice to the companies and clients they serve.”

At a conference held jointly last year by the University of Toronto’s Master of Management and Professional Accounting program and CPA Canada, Rao took a look at some of the risks of artificial intelligence, and how businesses can deal with them by employing responsible AI. As Wiecek, who wrote the introduction to the conference report, points out, “Responsible AI has a lot to do with data governance—an area that CPAs, because of their legacy in assurance and standard setting, are well placed to address. That’s why Foresight has placed a focus on the profession’s potential role in that area.”

SIX TYPES OF RISKS

According to Rao, the risks AI poses can be broadly divided into six categories, all with varying impacts on individuals, organizations and society.

- Performance: As Rao points out, AI algorithms that ingest real-world data and preferences as input (such as movie recommendation algorithms) run the risk of learning and imitating our biases and prejudices. “For example, just because I bought a children’s book for my niece, the algorithm might keep recommending children’s books for me,” he says. “Also, the more complex the algorithms, the more error-prone they are. And then there is a risk of performance instability and opaqueness—in other words, you are unable to explain why the algorithm picked a specific movie or music track for you.”

- Security: For as long as automated systems have existed, humans have tried to circumvent them, and it is no different with AI, says Rao. “In some cases, AI has expanded those risks exponentially. Apart from cyber intrusion risks, you have privacy and open-source software risks—not to speak of adversarial attacks.”

- Control: The more advanced the AI is, the more it exhibits “agency,” says Rao. “You’ve got the risk of AI going ‘rogue,’ and of not being able to control malevolent AI.”

- Economic: The widespread adoption of automation across all areas of the economy could cause job displacement by shifting demand to different skills. Also, we could see power concentrated in the hands of a few large corporations or even just one.

- Societal: The widespread adoption of complex and autonomous AI systems could result in “echo chambers” that have broader impacts on human-to-human interaction. For example, some say people gravitate to social media because it confirms preexisting beliefs (confirmation bias). Societal risks include the polarization of views and an intelligence divide.

- Ethical: AI solutions are designed with specific objectives in mind that could compete with the overarching organizational and societal values within which they operate. “Values could be misaligned or fall by the wayside altogether,” says Rao. “There is always a risk that data could be collected or used unethically or maliciously, as in deepfake videos. That’s one of the reasons why setting parameters around data collection and use—something Foresight is examining—is so important.”
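
Rao’s children’s-book example under the performance risk can be sketched in a few lines of Python. This is a deliberately naive, hypothetical recommender (the function names and the purchase data are assumptions made for illustration, not any real retailer’s algorithm): it simply treats past purchases as preferences, so a single gift bought for a niece dominates when the history is short.

```python
from collections import Counter
from typing import Dict, Sequence


def category_weights(purchases: Sequence[str]) -> Dict[str, float]:
    """Share of past purchases in each category (assumes a non-empty history)."""
    counts = Counter(purchases)
    total = sum(counts.values())
    return {category: count / total for category, count in counts.items()}


def recommend_category(purchases: Sequence[str]) -> str:
    """Naive rule: recommend whatever category was bought most often."""
    return Counter(purchases).most_common(1)[0][0]


# Hypothetical histories: one gift purchase versus a longer record.
sparse_history = ["children's books"]
longer_history = ["business", "children's books", "business", "business"]

print(recommend_category(sparse_history))   # the one-off gift drives the recommendation
print(recommend_category(longer_history))   # more data dilutes the one-off purchase
print(category_weights(longer_history))
```

The rule has no way of knowing that the gift was not a personal preference, which is the kind of learned bias the performance category describes.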

FOUR TYPES OF INTELLIGENCE

To mitigate the risks of AI, we need to be careful about how AI is used today and how it should be used in the future. And that means developing a human-centred approach, says Rao.

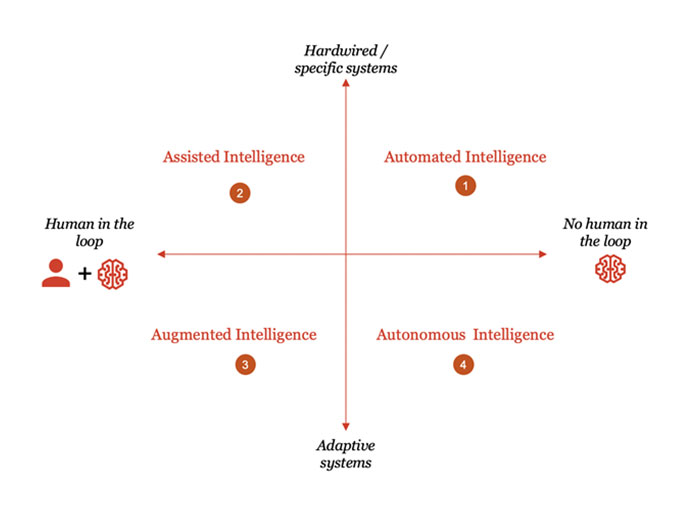

When developing such an approach, it’s useful to focus on two dimensions: how humans interact with AI and how the AI is interacting with the environment—i.e., the extent to which it adapts to changes. “If you plot these two dimensions on a graph,” says Rao, “you come up with four distinct ways in which AI is being used today and has been used in the past.”

- Automated intelligence: When manual or cognitive tasks, whether repetitive or not, are sufficiently simplified and standardized, they can be automated. (This might include extracting tabular information from documents, for example.)

- Assisted intelligence: As Rao explains, a number of actions and decisions require human judgment. “While the AI algorithm can do the number crunching, finding patterns and predictions, we still require humans to take the final decision or act based on the recommendations,” he says.

- Augmented intelligence: The more recent wave of advanced analytics and AI has focused on machine learning, says Rao. “As humans make judgments, often inconsistently and frequently irrationally, algorithms can learn about human judgments and factor them into its own recommendations.”

- Autonomous intelligence: Finally, there are some situations where we want the AI to adapt to the environment and to operate without any human involvement. (Think, for example, of an autonomous car that can drive us wherever we want, in any weather conditions, without assistance from humans.)

As we go through these four types of intelligence—from automated to autonomous—we require progressively more scrutiny, governance and oversight, because the risks increase significantly, says Rao. “That’s why the jump from augmented intelligence to autonomous intelligence requires both a technical leap and also social acceptance.”

Rao adds that with human-centred AI, we stay within the confines of assisted and augmented intelligence. “That’s where the human is still in the loop,” he says.
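
The two dimensions Rao describes can be read as two yes/no questions, which gives a simple lookup for the four types. The sketch below is one interpretation, not PwC’s published model: the quadrant assignments are inferred from the descriptions above, and the names `IntelligenceType`, `classify` and `is_human_centred` are hypothetical.

```python
from enum import Enum


class IntelligenceType(Enum):
    AUTOMATED = "automated intelligence"
    ASSISTED = "assisted intelligence"
    AUGMENTED = "augmented intelligence"
    AUTONOMOUS = "autonomous intelligence"


def classify(human_in_loop: bool, adapts_to_environment: bool) -> IntelligenceType:
    """Map the two dimensions onto one of the four types (assumed quadrant layout)."""
    if human_in_loop:
        if adapts_to_environment:
            return IntelligenceType.AUGMENTED
        return IntelligenceType.ASSISTED
    if adapts_to_environment:
        return IntelligenceType.AUTONOMOUS
    return IntelligenceType.AUTOMATED


def is_human_centred(kind: IntelligenceType) -> bool:
    """Human-centred AI stays within assisted and augmented intelligence."""
    return kind in (IntelligenceType.ASSISTED, IntelligenceType.AUGMENTED)


# Example: a self-driving car adapts to conditions and has no human in the loop.
car = classify(human_in_loop=False, adapts_to_environment=True)
print(car.value, "| human-centred:", is_human_centred(car))
```

Laid out this way, the jump Rao highlights, from augmented to autonomous, is the step where the human leaves the loop while the system keeps adapting.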

DEVELOPING A RESPONSIBLE AI FRAMEWORK

Given the risks and challenges inherent in the use of artificial intelligence, it’s imperative for organizations to consider them when designing and deploying AI applications, says Rao. That’s why PwC has developed a responsible AI framework that can be used as a guide. “The framework includes several key dimensions that encompass everything from values to control,” he says.

- Ethics and regulation: Organizations must not only comply with regulations but also adopt some of the key ethical principles of data and AI and embed them in the design of their AI systems.

- Bias and fairness: While it is impossible to arrive at a decision that is fair to all parties, AI systems can be designed to mitigate unwanted bias and arrive at decisions that are fair under a specific and clearly communicated definition, says Rao.

- Interpretability and explainability: To engender trust among users, organizations must endeavour to explain how a decision was arrived at and how the model works.

- Robustness: Organizations must ensure that the AI system’s performance is robust and that small changes in input do not produce large variations in performance.

- Security and safety: Organizations must ensure that the AI systems are secure and cannot be hacked.

- Privacy: Organizations must ensure that the data used by the AI systems and the insights generated preserve and protect the privacy of individuals and other legal entities.

- Governance and accountability: To minimize risk and maximize ROI, organizations must introduce enterprise-wide and end-to-end accountability for AI applications, data, data use and consistency of operations.
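
Two of these dimensions, bias and fairness and robustness, lend themselves to small numeric checks. The Python sketch below is illustrative only and is not part of PwC’s framework. It assumes one possible “specific and clearly communicated definition” of fairness (demographic parity, meaning equal approval rates across groups) and one simple reading of robustness (how far the output moves when an input is nudged slightly). The function names, the toy scoring model and the sample data are all hypothetical.

```python
from typing import Callable, Dict, Sequence


def demographic_parity_gap(decisions: Sequence[int], groups: Sequence[str]) -> float:
    """Largest difference in approval rate between groups (0.0 means equal rates).

    Decisions are 1 (approved) or 0 (declined); assumes at least one decision.
    """
    totals: Dict[str, int] = {}
    approvals: Dict[str, int] = {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + decision
    rates = [approvals[group] / totals[group] for group in totals]
    return max(rates) - min(rates)


def max_output_shift(
    model: Callable[[float], float], inputs: Sequence[float], epsilon: float
) -> float:
    """Largest change in model output when each input is nudged by +/- epsilon."""
    return max(
        abs(model(x + delta) - model(x))
        for x in inputs
        for delta in (-epsilon, epsilon)
    )


# Hypothetical data: group "a" is approved 3 times out of 4, group "b" once out of 4.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_gap(decisions, groups))  # 0.5


def toy_score(x: float) -> float:
    """Hypothetical scoring model used only to exercise the robustness check."""
    return 0.5 * x


print(max_output_shift(toy_score, [1.0, 2.0, 3.0], epsilon=0.1))
```

An organization would still have to decide which fairness definition and which tolerance for output shifts are appropriate for its context; the code only measures against whatever has been agreed.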

As Rao points out, a human-centred approach to AI, combined with a framework like the one above, can provide a way for organizations to benefit from the many upsides of AI, while mitigating the risks. “And CPAs are well positioned to play a major role in every single dimension of that framework,” he says.

AI-CURIOUS?

For more on AI, see the summary report of the 2019 MMPA conference, and consider attending the 2020 MMPA conference, being held virtually on November 20. The theme for this year’s event is “AI and machine learning for complex business decision making.” The conference is sponsored by CPA Canada and is open to all CPAs.

To learn more about Foresight, CPA Canada’s initiative to reimagine the future of the accounting profession, see the interactive platform, where you can contribute to important discussions on topics such as data and value creation. And visit CPA Canada’s resources on data management, including “Canada’s economy and society are becoming more digital” and “Making sense of data value chains.”

This article was first published on 11.17.2020 by CPA Canada.